02.Bayesian networks(W3)

Bayesian networks: examples, definition

Details

:::

Bayesian networks

What is a BN?

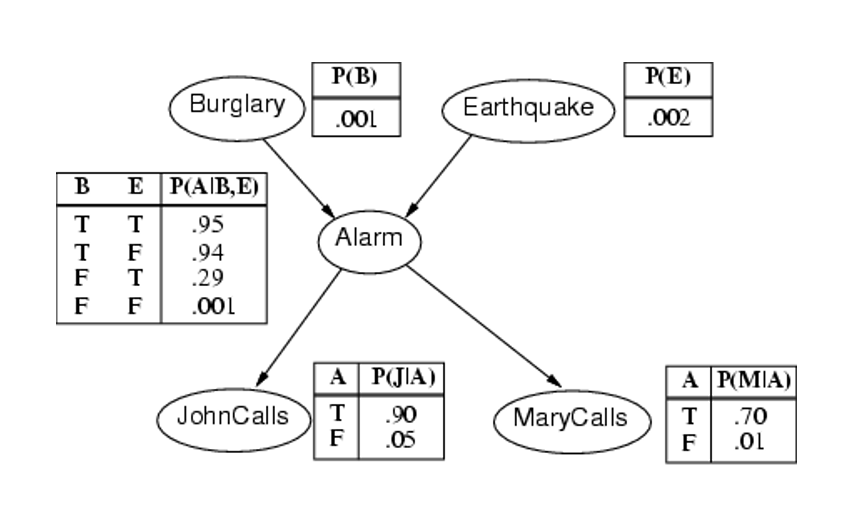

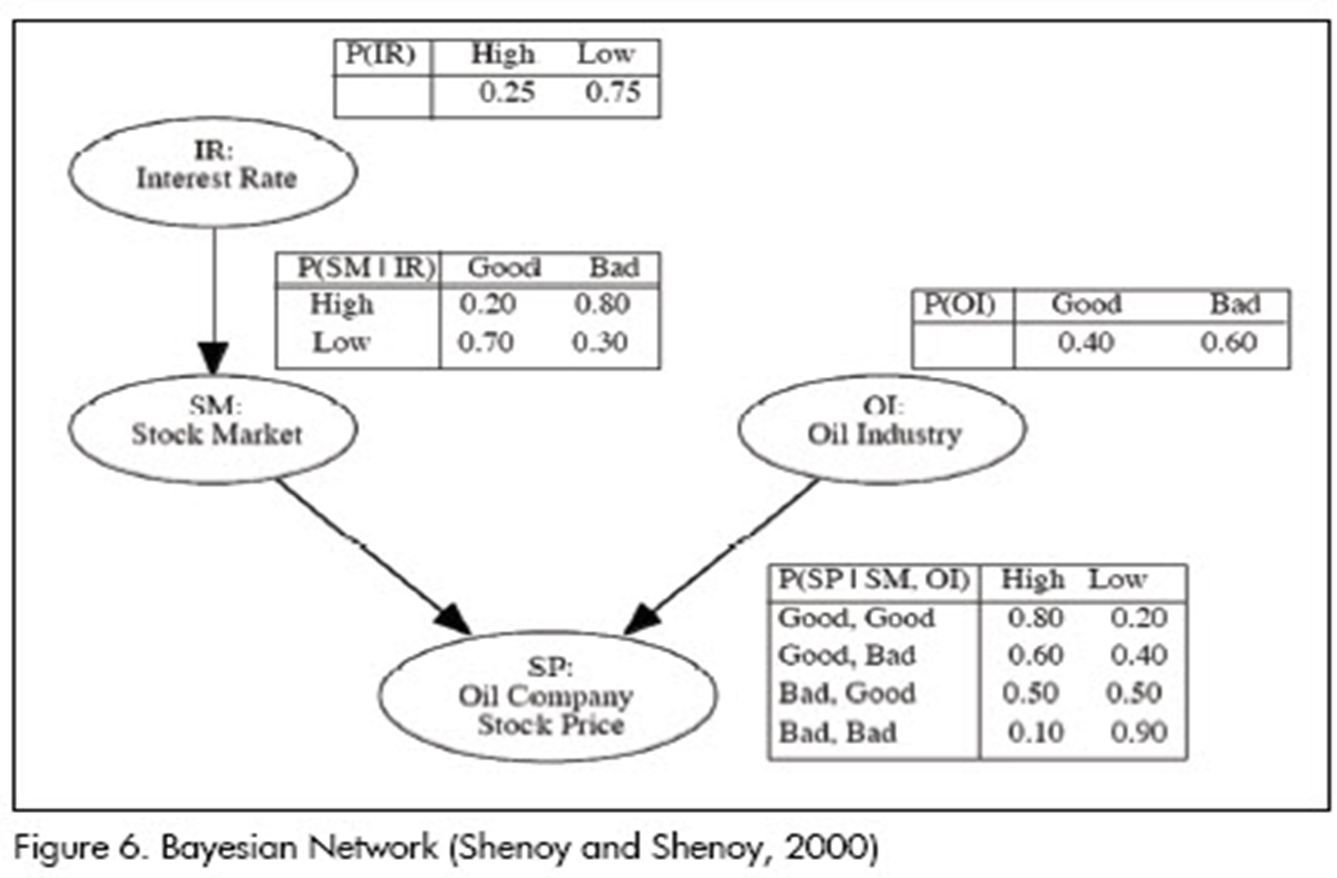

A Bayesian network (BN) is a graphical model for depicting probabilistic relationships among a set of variables.

- A BN encodes the conditional independence relationships between the variables in its graph structure.

- Provides a compact representation of the joint probability distribution over the variables

- A problem domain is modeled by a list of variables

- Knowledge about the problem domain is represented by a joint probability

- Directed links represent direct causal influences

- Each node has a conditional probability table quantifying the effects from the parents.

- The graph contains no directed cycles
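The "no directed cycles" requirement can be checked mechanically. The following is a minimal sketch, assuming the graph is stored as a dictionary mapping each node to its list of parents; the function name `is_acyclic` and the burglary-alarm structure (B, E, A, J, M) are illustrative assumptions, not taken from the slides.

```python
from collections import deque

def is_acyclic(parents):
    """Return True if the directed graph has no directed cycles.

    `parents` maps each node to the list of its parent nodes
    (every parent must also appear as a key).
    """
    # Build child lists and in-degrees (number of parents) for every node.
    children = {node: [] for node in parents}
    in_degree = {node: len(ps) for node, ps in parents.items()}
    for node, ps in parents.items():
        for p in ps:
            children[p].append(node)

    # Kahn's algorithm: repeatedly remove nodes with no remaining parents.
    queue = deque(n for n, d in in_degree.items() if d == 0)
    removed = 0
    while queue:
        node = queue.popleft()
        removed += 1
        for child in children[node]:
            in_degree[child] -= 1
            if in_degree[child] == 0:
                queue.append(child)

    # All nodes can be removed only when there is no directed cycle.
    return removed == len(parents)

# Assumed example structure: Burglary and Earthquake cause Alarm,
# Alarm causes JohnCalls and MaryCalls.
alarm = {"B": [], "E": [], "A": ["B", "E"], "J": ["A"], "M": ["A"]}
cyclic = {"X": ["Z"], "Y": ["X"], "Z": ["Y"]}

print(is_acyclic(alarm))   # True
print(is_acyclic(cyclic))  # False
```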

Two important properties of Bayesian Networks

- Encodes the conditional independence relationships between the variables in the graph structure

- Is a compact representation of the joint probability distribution over the variables
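To see why the representation is compact, one can count parameters. This sketch assumes all variables are binary and uses the burglary-alarm structure (B, E, A, J, M) as an illustrative example; the helper names are hypothetical.

```python
def full_joint_parameters(n):
    """Independent probabilities in a full joint table over n binary variables."""
    return 2 ** n - 1

def bn_parameters(parents):
    """Independent probabilities in a BN over binary variables.

    A binary node with k binary parents needs one probability
    per parent configuration, i.e. 2**k numbers.
    """
    return sum(2 ** len(ps) for ps in parents.values())

# Assumed example structure (node -> list of parents).
alarm = {"B": [], "E": [], "A": ["B", "E"], "J": ["A"], "M": ["A"]}

print(full_joint_parameters(len(alarm)))  # 31
print(bn_parameters(alarm))               # 10
```

The gap grows quickly: the full joint table is exponential in the number of variables, while the BN is exponential only in the largest number of parents of any node.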

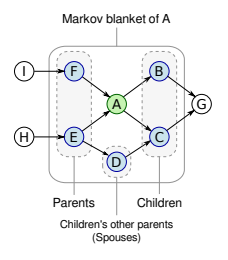

What is a Markov blanket?

The set of nodes that graphically isolates a target node from the rest of the DAG is called its Markov blanket and includes:

- its parents;

- its children;

- the other parents of its children (nodes that share a child with it).
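The three parts of the Markov blanket can be read directly off the graph. A minimal sketch, assuming the DAG is stored as a node-to-parents dictionary; `markov_blanket` is a hypothetical helper and the alarm structure is an illustrative assumption.

```python
def markov_blanket(parents, target):
    """Return the Markov blanket of `target` as a set of node names."""
    # Children are the nodes that list `target` among their parents.
    children = [n for n, ps in parents.items() if target in ps]
    blanket = set(parents[target]) | set(children)
    # Add the other parents of each child (nodes sharing a child).
    for child in children:
        blanket |= set(parents[child])
    blanket.discard(target)
    return blanket

# Assumed example structure (node -> list of parents).
alarm = {"B": [], "E": [], "A": ["B", "E"], "J": ["A"], "M": ["A"]}

print(sorted(markov_blanket(alarm, "B")))  # ['A', 'E']
print(sorted(markov_blanket(alarm, "A")))  # ['B', 'E', 'J', 'M']
```

Note that E is in the blanket of B only because the two share the child A.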


Components of A Bayesian Network

A Bayesian network consists of two components:

- A directed acyclic graph (DAG)

Characteristics

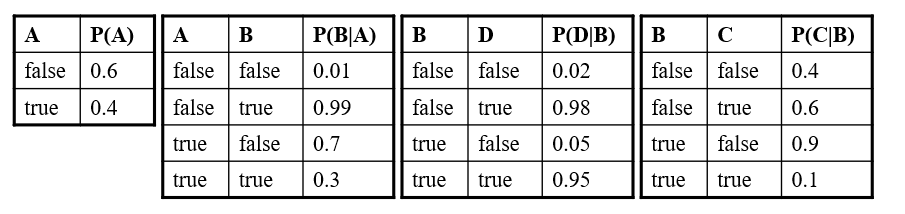

- Each node in the graph is a random variable

- A node X is a parent of another node Y if there is an arrow from node X to node Y

e.g. A is a parent of B

- Informally, an arrow from node X to node Y means X has a direct influence on Y

- A set of conditional probability tables, one for each node in the graph

Characteristics

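- Each node Xi has a conditional probability distribution P(Xi | Parents(Xi)) that quantifies the effect of the parents on the node

- The parameters are the probabilities in these conditional probability tables (CPTs)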

Bayesian Network Example

Using the network in the example, suppose you want to calculate:

Details for data refer to here.

Conditional Independence

The Markov condition: Given its parents (P1, P2), a node (X) is conditionally independent of its non-descendants (ND1, ND2).

The Joint Probability Distribution

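Due to the Markov condition, we can compute the joint probability distribution over all the variables $X_1, \ldots, X_n$ in the Bayesian network using the formula:

$$P(X_1 = x_1, \ldots, X_n = x_n) = \prod_{i=1}^{n} P\big(X_i = x_i \mid \mathrm{Parents}(X_i)\big)$$

Where $\mathrm{Parents}(X_i)$ denotes the values of the parents of node $X_i$ in the graph.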
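Computing a joint probability as a product of one conditional probability per node can be sketched in a few lines. The CPT values below are the widely used textbook numbers for the burglary-alarm network and are an assumption here (the notes' own tables live in the figures); `joint_probability` is a hypothetical helper.

```python
# Node -> list of parents (assumed alarm network structure).
PARENTS = {"B": [], "E": [], "A": ["B", "E"], "J": ["A"], "M": ["A"]}

# CPT[node][tuple of parent values] = P(node = True | parents).
# Values are assumed textbook numbers, not taken from the slides.
CPT = {
    "B": {(): 0.001},
    "E": {(): 0.002},
    "A": {(True, True): 0.95, (True, False): 0.94,
          (False, True): 0.29, (False, False): 0.001},
    "J": {(True,): 0.90, (False,): 0.05},
    "M": {(True,): 0.70, (False,): 0.01},
}

def joint_probability(assignment):
    """P(all variables take the given values), one factor per node."""
    prob = 1.0
    for node, ps in PARENTS.items():
        p_true = CPT[node][tuple(assignment[p] for p in ps)]
        prob *= p_true if assignment[node] else 1.0 - p_true
    return prob

# P(no burglary, no earthquake, alarm, John calls, Mary calls)
p = joint_probability({"B": False, "E": False, "A": True, "J": True, "M": True})
print(round(p, 6))  # 0.000628
```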

Bayesian network inference

Using a Bayesian network to compute probabilities is called inference

In general, inference involves queries of the form P(X | E), where:

E: The evidence variable(s)

X: The query variable(s)

Diagnostic (evidential, abductive): from effect to cause

- P(Burglary | JohnCalls): P(B|J)=0.016

- P(B|J,M)=0.29

- P(A|J,M)=0.76

Causal (predictive): From cause to effect

- P(J|B)=0.86

- P(M|B)=0.67

Intercausal (explaining away): common effect

- P(B|A)=0.38

- P(B|A, E)=0.003

Mixed: two or more of the above combined

- P(A|J,E’)=0.03

- P(B|J,E’)=0.017
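Queries of all four types can be answered by brute-force enumeration: sum the joint probability over every assignment consistent with the evidence, then normalise. This is a sketch under the same assumed textbook CPT values for the alarm network, so the results may differ slightly from the figures quoted above; `query` is a hypothetical helper.

```python
from itertools import product

# Assumed alarm network structure and textbook CPT values.
PARENTS = {"B": [], "E": [], "A": ["B", "E"], "J": ["A"], "M": ["A"]}
CPT = {
    "B": {(): 0.001},
    "E": {(): 0.002},
    "A": {(True, True): 0.95, (True, False): 0.94,
          (False, True): 0.29, (False, False): 0.001},
    "J": {(True,): 0.90, (False,): 0.05},
    "M": {(True,): 0.70, (False,): 0.01},
}

def joint(world):
    """Joint probability of a complete assignment (product over nodes)."""
    prob = 1.0
    for node, ps in PARENTS.items():
        p_true = CPT[node][tuple(world[p] for p in ps)]
        prob *= p_true if world[node] else 1.0 - p_true
    return prob

def query(target, evidence):
    """P(target = True | evidence) by enumerating all assignments."""
    nodes = list(PARENTS)
    numerator = denominator = 0.0
    for values in product([True, False], repeat=len(nodes)):
        world = dict(zip(nodes, values))
        # Skip assignments that contradict the evidence.
        if any(world[v] != val for v, val in evidence.items()):
            continue
        p = joint(world)
        denominator += p
        if world[target]:
            numerator += p
    return numerator / denominator

print(round(query("B", {"J": True}), 3))             # diagnostic: 0.016
print(round(query("B", {"J": True, "M": True}), 3))  # diagnostic: 0.284
print(round(query("J", {"B": True}), 3))             # causal
print(round(query("B", {"A": True}), 3))             # intercausal
print(round(query("B", {"A": True, "E": True}), 3))  # explaining away
```

Enumeration visits all 2^n assignments, which is fine for five variables and is exactly why larger networks need variable elimination or approximate methods.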

In general, the problem of Bayesian network inference is NP-hard: in the worst case, exact algorithms take time exponential in the size of the graph.

Exact inference

- Probability and Markov condition

- Variable elimination

- Clustering / join tree algorithms

Approximate inference

- Stochastic simulation / sampling methods

- Markov chain Monte Carlo methods

- Genetic algorithms

- Neural networks

- Simulated annealing

- Mean field theory
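As one concrete instance of the sampling methods listed above, here is a minimal sketch of likelihood weighting. It is an illustrative choice, not necessarily the method used in the course; the CPT values are the assumed textbook numbers for the alarm network and `likelihood_weighting` is a hypothetical helper.

```python
import random

# Assumed alarm network structure and textbook CPT values.
PARENTS = {"B": [], "E": [], "A": ["B", "E"], "J": ["A"], "M": ["A"]}
CPT = {
    "B": {(): 0.001},
    "E": {(): 0.002},
    "A": {(True, True): 0.95, (True, False): 0.94,
          (False, True): 0.29, (False, False): 0.001},
    "J": {(True,): 0.90, (False,): 0.05},
    "M": {(True,): 0.70, (False,): 0.01},
}
ORDER = ["B", "E", "A", "J", "M"]  # parents always come before children

def likelihood_weighting(target, evidence, n_samples, rng):
    """Estimate P(target = True | evidence) from weighted samples."""
    weighted_true = total_weight = 0.0
    for _ in range(n_samples):
        sample, weight = {}, 1.0
        for node in ORDER:
            p_true = CPT[node][tuple(sample[p] for p in PARENTS[node])]
            if node in evidence:
                # Evidence is fixed, and the sample is weighted by
                # how likely that evidence value is given its parents.
                sample[node] = evidence[node]
                weight *= p_true if evidence[node] else 1.0 - p_true
            else:
                # Non-evidence variables are sampled from their CPT.
                sample[node] = rng.random() < p_true
        total_weight += weight
        if sample[target]:
            weighted_true += weight
    return weighted_true / total_weight

rng = random.Random(0)  # fixed seed so the run is repeatable
estimate = likelihood_weighting("J", {"B": True}, 20000, rng)
print(round(estimate, 2))
```

With these assumed CPTs the exact value of P(J|B) is about 0.849, so the estimate should land close to that; the error shrinks as the number of samples grows.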

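Marginalisation: sum the joint distribution over the values of the variables that are not of interest, e.g. $P(X) = \sum_{y} P(X, Y = y)$

Markov condition: a node is conditionally independent of its non-descendants given its parents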

Diagnostic (evidential, abductive): from effect to cause
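A diagnostic query such as P(B|J) can be worked by hand by combining Bayes' rule, marginalisation, and the Markov condition. The derivation below is a sketch that assumes the usual alarm network structure (B and E are parents of A; A is the parent of J and M), with lowercase $a$ and $e$ ranging over the values of A and E.

$$
\begin{aligned}
P(B \mid J) &= \frac{P(J \mid B)\,P(B)}{P(J)} \\
P(J \mid B) &= \sum_{a} P(J \mid a, B)\,P(a \mid B) = \sum_{a} P(J \mid a)\,P(a \mid B) \\
P(a \mid B) &= \sum_{e} P(a \mid B, e)\,P(e \mid B) = \sum_{e} P(a \mid B, e)\,P(e) \\
P(J) &= \sum_{a} P(J \mid a)\,P(a)
\end{aligned}
$$

The second line drops B because J is independent of its non-descendant B given its parent A. The third line replaces $P(e \mid B)$ with $P(e)$ because E has no parents and B is not a descendant of E. Every remaining term is either a CPT entry or a sum of products of CPT entries.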

- Intercausal (explaining away): common effect

Summary

Bayesian networks

- Definition

- Properties: Markov condition, compact joint probability

Bayesian networks inference

- Understand how to use probability theory and the Markov condition to perform inference for different types of queries

- In practice, we will use R (the gRain package)

Next week: learning Bayesian networks from data

References

- Naive Bayes Classifier

Resource from Youtube

- Week 3 Slides from Thuc (SP52023)