01.Naïve Bayes Classifier

01.Naïve Bayes Classifier

Background

There are three methods to establish a classifier

A. Model a classification rule directly

B. Model the probability of class memberships given input data

C. Make a probabilistic model of data within each class

Examples

| Method | discriminative classification | generative classification | probabilistic classification | Examples |

|---|---|---|---|---|

| A | Yes | No | No | k-NN, decision trees, perceptron, SVM |

| B | Yes | No | Yes | perceptron with the cross-entropy cost |

| C | No | Yes | Yes | naive Bayes, model based classifiers |

Probability Basics

Prior, conditional and joint probability for random variables

- Prior probability:

- Conditional probability:

- Joint probability:

- Relationship:

- Independence:

Bayes Rule (Bayesian Inference)

Basic Idea: We got a data

Quiz

We have two six-sided dice. When they are tolled, it could end up with the following occurance:

A. dice 1 lands on side "3"

B. dice 2 lands on side "1"

C. Two dice sum to eight

We set the probabilities:

a. dice 1:

b. dice 2:

c. dice 3:

Answer the following Questions:

- Is

Answer: Yes

Probabilistic Classification

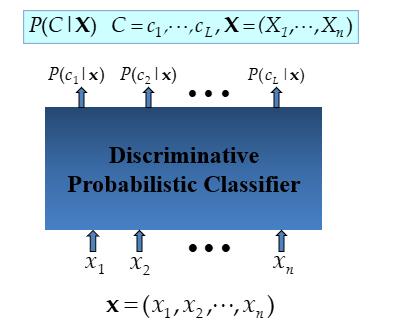

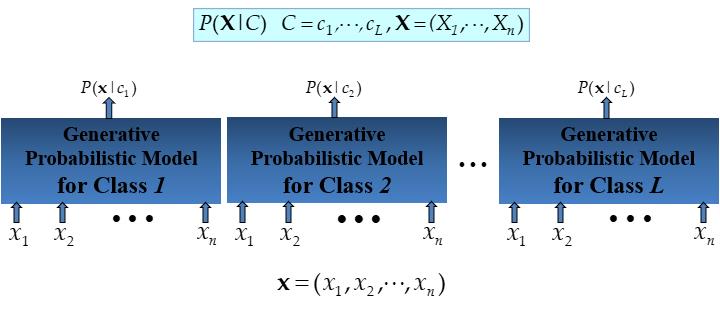

Establishing a probabilistic model for classification

Discriminative model

Generative model

MAP classification rule

- MAP: Maximum A Posterior

- Assign

Generative classification with the MAP rule

- Apply Bayes rule to convert them into posterior probabilities

Naïve Bayes

- Bayes classification

Difficulty: learning the joint probability - Naïve Bayes classification

Assumption that all input features are conditionally independent!

MAP classification rule: for

- Algorithm: Discrete-Valued Features

- Learning Phase: Given a training set S,

For each target value of

for every feature value

Output: conditional probability tables; for - Test Phase: Given an unknown instance

Look up tables to assign the label

- Learning Phase: Given a training set S,

Examples: Discrete-Valued Features

Original Data

| Day | Outlook | Temperature | Humidity | Wind | PlayTennis |

|---|---|---|---|---|---|

| D1 | Sunny | Hot | High | Weak | No |

| D2 | Sunny | Hot | High | Strong | No |

| D3 | Overcast | Hot | High | Weak | Yes |

| D4 | Rain | Mild | High | Weak | Yes |

| D5 | Rain | Cool | Normal | Weak | Yes |

| D6 | Rain | Cool | Normal | Strong | No |

| D7 | Overcast | Cool | Normal | Strong | Yes |

| D8 | Sunny | Mild | High | Weak | No |

| D9 | Sunny | Cool | Normal | Weak | Yes |

| D10 | Rain | Mild | Normal | Weak | Yes |

| D11 | Sunny | Mild | Normal | Strong | Yes |

| D12 | Overcast | Mild | High | Strong | Yes |

| D13 | Overcast | Hot | Normal | Weak | Yes |

| D14 | Rain | Mild | High | Strong | No |

Learning Phase

| Outlook | Play = Yes | Play = No |

|---|---|---|

| Sunny | ||

| Overcast | ||

| Rain |

| Temperature | Play = Yes | Play = No |

|---|---|---|

| Hot | ||

| Mild | ||

| Cool |

| Outlook | Play = Yes | Play = No |

|---|---|---|

| High | ||

| Normal |

| Outlook | Play = Yes | Play = No |

|---|---|---|

| Strong | ||

| Weak |

Test Phase

Given a new instance, predict its label

Look up tables achieved in the learning phrase

Decision making with the MAP rule

Final Result

The fact

Algorithm: Continuous-valued Features

- Numberless values for a feature

- Conditional probability often modeled with the normal

- Learning Phase:

for

Output: - Test Phase: Given an unknown instance

- Instead of looking-up tables, calculate conditional probabilities with all the normal distributions achieved in the learning phrase

- Apply the MAP rule to make a decision

Example: Continuous-valued Features

Data

Temperature is naturally of continuous value.

Yes: 25.2, 19.3, 18.5, 21.7, 20.1, 24.3, 22.8, 23.1, 19.8

No: 27.3, 30.1, 17.4, 29.5, 15.1Estimate mean and variance for each class

According to the formula above, the result could be get

Learning Phase

output two Gaussian models for P(temp|C)

Relevant Issues

- Violation of Independence Assumption

- For many real world tasks,

- Nevertheless, naïve Bayes works surprisingly well anyway!

- For many real world tasks,

- Zero conditional probability Problem

- If no example contains the attribute value

- In this circumstance, $$ during test

- For a remedy, conditional probabilities estimated with

Summary

- Naïve Bayes: the conditional independence assumption

- Training is very easy and fast; just requiring considering each attribute in each class separately

- Test is straightforward; just looking up tables or calculating conditional probabilities with estimated distributions

- A popular generative model

- Performance competitive to most of state-of-the-art classifiers even in presence of violating independence assumption

- Many successful applications, e.g., spam mail filtering

- A good candidate of a base learner in ensemble learning

- Apart from classification, naïve Bayes can do more…

Explanation from Thuc

References

- Naive Bayes Classifier

Resource from Youtube

- Week 2 Slides from Thuc (SP52023)