This article reproduces the content of the online paper and adds some notes for better understanding. Please download the PDF of the paper here.

The Development of an Instrument for Longitudinal Learning Diagnosis of Rational Number Operations Based on Parallel Tests

Paper Notes

The precondition of the measurement of longitudinal learning is a high-quality instrument for longitudinal learning diagnosis. This study developed an instrument for longitudinal learning diagnosis of rational number operations. To provide a reference for practitioners who want to develop such an instrument, the development process was presented step by step. The development process contains three main phases: the Q-matrix construction and item development, the preliminary/pilot test for item quality monitoring, and the formal test for test quality control. The results of this study indicate that

- (a) both the overall quality of the tests and the quality of each item are good enough and that

- (b) the three tests meet the requirements of parallel tests,

which can be used as an instrument for longitudinal learning diagnosis to track students’ learning.

Key Information

Three main phases of this report:

- Q-matrix construction and item development,

- the preliminary/pilot test for item quality monitoring, and

- the formal test for test quality control.

Introduction

In recent decades, with the development of psychometrics, learning diagnosis (Zhan, 2020b) or cognitive diagnosis (Leighton and Gierl, 2007), which objectively quantifies students’ current learning status, has drawn increasing interest. Learning diagnosis aims to promote students’ learning according to diagnostic results, which typically include diagnostic feedback and interventions. However, most existing cross-sectional learning diagnoses are not concerned with measuring growth in learning. By contrast, longitudinal learning diagnosis evaluates students’ knowledge and skills (collectively known as latent attributes) and identifies their strengths and weaknesses over a period (Zhan, 2020b).

Key Information

The functions of longitudinal learning diagnosis

- evaluates students’ knowledge and skills

- identifies their strengths and weaknesses

A complete longitudinal learning diagnosis should include at least two parts: an instrument for longitudinal learning diagnosis of specific content and a longitudinal learning diagnosis model (LDM). The precondition of the measurement of longitudinal learning is a high-quality instrument for longitudinal learning diagnosis. The data collected from the instrument for longitudinal learning diagnosis can provide researchers with opportunities to develop longitudinal LDMs that can be used to track individual growth over time. Additionally, in recent years, several longitudinal LDMs have been proposed (for a review, see Zhan, 2020a). Although the usefulness of these longitudinal LDMs in analyzing longitudinal learning diagnosis data has been evaluated through some simulation studies and a few applications, the development process of an instrument for longitudinal learning diagnosis is rarely mentioned (cf. Wang et al., 2020). The lack of an operable development process for the instrument hinders the application and promotion of longitudinal learning diagnosis in practice and prevents practitioners in specific fields from applying this approach to track individual growth in their domains.

Key Information

Parts of a complete longitudinal learning diagnosis

- an instrument for longitudinal learning diagnosis of specific content

- a longitudinal learning diagnosis model (LDM)

Currently, many applications use cross-sectional LDMs to diagnose individuals’ learning status in the field of mathematics because the structure of mathematical attributes is relatively easy to identify, such as fraction calculations (Tatsuoka, 1983; Wu, 2019), linear algebraic equations (Birenbaum et al., 1993), and spatial rotations (Chen et al., 2018; Wang et al., 2018). Some studies also apply cross-sectional LDMs to analyze data from large-scale mathematical assessments (e.g., George and Robitzsch, 2018; Park et al., 2018; Zhan et al., 2018; Wu et al., 2020). However, most of these application studies use a cross-sectional design and cannot track the individual growth of mathematical ability.

Key Information

Most applications use a cross-sectional design and therefore cannot track the individual growth of mathematical ability.

In the field of mathematics, understanding rational numbers is crucial for students’ mathematics achievement (Booth et al., 2014). Rational numbers and their operations are among the most basic concepts of numbers and mathematical operations, respectively. Although much effort is put into teaching rational numbers, many students and teachers still struggle to understand them (Cramer et al., 2002; Mazzocco and Devlin, 2008). Rational number operation is the first challenge that students encounter in mathematics at the beginning of junior high school. Learning rational number operation is not only the premise of the subsequent learning of mathematics in junior high school but is also an important opportunity to cultivate students’ interest and confidence in mathematics learning.

The main purpose of this study is to develop an instrument for longitudinal learning diagnosis, especially for the content of rational number operations. We present the development process step by step to provide a reference for practitioners to develop the instrument for longitudinal learning diagnosis.

Key Information

Main purpose of this study: develop an instrument for longitudinal learning diagnosis, especially for the content of rational number operations.

Development of the Instrument for Longitudinal Learning Diagnosis

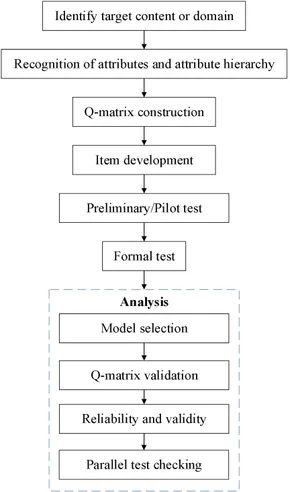

As the repeated measures design is not always feasible in longitudinal educational measurement, in this study, the developed instrument is a longitudinal assessment consisting of parallel tests. The whole development process is shown in Figure 1. In the rest of the paper, we describe the development process step by step.

Recognition of Attributes and Attribute Hierarchy

The first step in designing and developing a diagnostic assessment is recognizing the core attributes involved in the field of study (Bradshaw et al., 2014). In the analysis of previous studies, the confirmation of attributes mainly adopted the method of literature review (Henson and Douglas, 2005) and expert judgment (Buck et al., 1997; Roduta Roberts et al., 2014; Wu, 2019). This study used the combination of these two methods.

Key Information

First step: recognizing the core attributes involved in the field of study

Methods used in previous studies to confirm attributes:

- literature review

- expert judgment

Method used in this study to confirm attributes:

A combination of the two methods above

First, relevant content knowledge was extracted according to the analysis of mathematics curriculum standards, mathematics exam outlines, teaching materials and supporting books, existing provincial tests, and chapter exercises. By reviewing the literature, we find that existing research mainly focuses on one or several parts of rational number operation. For example, fraction addition and subtraction is the part most often covered in existing research (e.g., Tatsuoka, 1983; Wu, 2019). In contrast, it is not common for diagnostic tests to cover rational number operation as a whole. Ning et al. (2012) pointed out that rational number operation contains 15 attributes; however, such a large number of attributes is impractical.

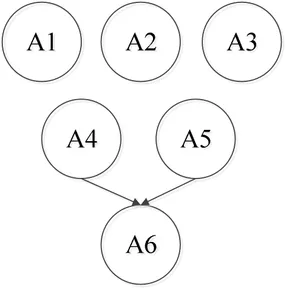

Second, according to the attribute framework based on the diagnosis of mathematics learning among students in 20 countries in the Third International Math and Science Study–Revised (Tatsuoka et al., 2004), the initial attribute framework and the corresponding attribute hierarchy (Leighton et al., 2004) of this study were determined after a discussion among six experts, including two frontline mathematics teachers who have more than 10 years’ experience in mathematics education, two graduate students majoring in mathematics, and two graduate students majoring in psychometrics (see Table 1 and Figure 2).

| Label | Attribute | Description |
|---|---|---|
| A1 | Rational number | Concepts and classifications. |
| A2 | Related concepts of the rational number | Opposite number, absolute value. |
| A3 | Number axis | Concept, number conversion, comparison of the size of numbers. |
| A4 | Addition and subtraction of rational numbers | Addition, subtraction, and addition operation rules. |
| A5 | Multiplication and division of rational numbers | Multiplication, involution, multiplication operation rule, division and reciprocal. Reduction of fractions to a common denominator. |
| A6 | Mixed operation of rational numbers | First involution, then multiplication and division, and finally addition and subtraction; if there are numbers in parentheses, calculate the ones in the parentheses first. |

Info

A1 = rational number;

A2 = related concepts of rational numbers;

A3 = axis;

A4 = addition and subtraction of rational numbers;

A5 = multiplication and division of rational numbers;

A6 = mixed operation of rational numbers.

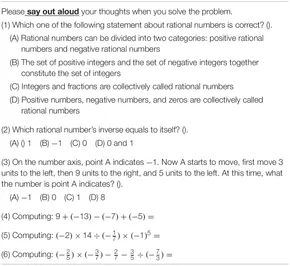

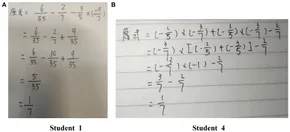

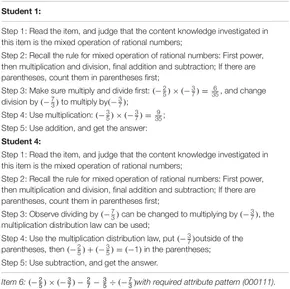

Third, a reassessment by another group of eight experts (frontline mathematics teachers) and the think-aloud protocol analysis (Roduta Roberts et al., 2014) were used to verify the rationality of the initial attribute framework and that of the corresponding attribute hierarchy. All experts agreed that the attributes and their hierarchical relationships were reasonable. In the think-aloud protocol analysis, six items were initially prepared according to the initial attribute framework and attribute hierarchy (see Table 2). Then, six seventh graders were selected according to above-average performance, gender balance, willingness to participate, and ability to express their thinking process (Gierl et al., 2008). The experimenter tested these students individually and recorded their responses; while responding, the students were required to say their problem-solving train of thought aloud. Taking the responses of two students to item 6 as an example, Figure 3 and Table 3 present their problem-solving process and thinking process, respectively. Although different students used different problem-solving processes, they all used addition, subtraction, multiplication, and division to solve the items on the mixed operation of rational numbers. Therefore, mastering A4 and A5 is a prerequisite for mastering A6, which supports the rationality of the attribute hierarchy proposed by the experts.

Please say your thoughts aloud as you solve the problem.

- (1) Which one of the following statements about rational numbers is correct?

- (A) Rational numbers can be divided into two categories, positive rational numbers and negative rational numbers.

- (B) The set of positive integers and the set of negative integers together constitute the set of integers.

- (C) Integers and fractions are collectively called rational numbers.

- (D) Positive numbers, negative numbers, and zero are collectively called rational numbers.

- (2) Which rational number's inverse is equal to itself?

- (A) 1 (B) -1 (C) 0 (D) 0 and 1

- (3) On the number axis, point A indicates -1. Now A starts to move: first 3 units to the left, then 9 units to the right, and then 5 units to the left. What number does point A indicate now?

- (A) -1 (B) 0 (C) 1 (D) 8

- (4) Compute: 9 + (-13) - (-7) + (-5) =

- (5) Compute:

- (6) Compute:

Info

In item 6,

Student 1:

Step 1: Read the item, and judge that the content knowledge investigated in this item is the mixed operation of rational numbers.

Step 2: Recall the rule for the mixed operation of rational numbers: first powers, then multiplication and division, and finally addition and subtraction; if there are parentheses, calculate what is inside them first.

Step 3: Perform multiplication and division first:

Step 4: Use multiplication:

Step 5: Use addition and get the answer.

Finally, as presented in Table 1, the attributes of rational number operation fell into the following six categories: (A1) rational number, (A2) related concepts of rational numbers, (A3) axis, (A4) addition and subtraction of rational numbers, (A5) multiplication and division of rational numbers, and (A6) mixed operation of rational numbers. The six attributes followed a hierarchical structure (Figure 2), which indicates that A1–A3 are structurally independent and that A4 and A5 are both needed to master A6.

Q-Matrix Construction and Item Development

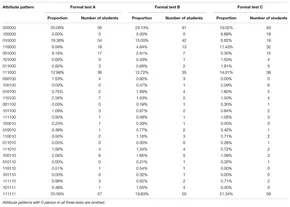

According to the attribute hierarchy, A4 and A5 are both needed to master A6. Therefore, the attribute patterns that contain A6 but lack either A4 or A5 are unattainable. Theoretically, there are 40 rather than 2^6 = 64 attainable attribute patterns.

Key Information

- 40 attainable attribute patterns.

- Factors for constructing the Q-matrix

- the Q-matrix contains at least one reachability matrix for completeness

- each attribute is examined at least twice

- the test time is limited to a teaching period of 40 min to ensure that students have a high degree of involvement.

Test length: 18

- 12 multiple-choice items

- 6 calculation items

- 4-5 items for each of the 18 attribute patterns.

- There are 80 items in the item bank.

Note

A1 = rational number;

A2 = related concepts of rational numbers;

A3 = axis;

A4 = addition and subtraction of rational numbers;

A5 = multiplication and division of rational numbers;

A6 = mixed operation of rational numbers.
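
The count of 40 attainable patterns can be checked by enumeration. The following is a minimal sketch, assuming only the hierarchy described above (A4 and A5 are prerequisites of A6, and A1 to A3 are independent). The function names are hypothetical and are not taken from the paper; `reachability_rows` shows one common way to read a reachability matrix as Q-matrix rows, where each row lists an attribute together with its prerequisites.

```python
from itertools import product

ATTRIBUTES = ("A1", "A2", "A3", "A4", "A5", "A6")
# Assumed from the attribute hierarchy in the text: A4 -> A6 and A5 -> A6.
PREREQUISITES = {"A6": ("A4", "A5")}


def required_attributes(attribute):
    """Return the attribute plus all of its direct and indirect prerequisites."""
    required = {attribute}
    for prerequisite in PREREQUISITES.get(attribute, ()):
        required |= required_attributes(prerequisite)
    return required


def is_attainable(pattern):
    """A pattern is attainable if every mastered attribute has its prerequisites mastered."""
    mastered = {a for a, m in zip(ATTRIBUTES, pattern) if m}
    return all(required_attributes(a) <= mastered for a in mastered)


def attainable_patterns():
    """Enumerate all 2^6 binary patterns and keep the attainable ones."""
    return [p for p in product((0, 1), repeat=len(ATTRIBUTES)) if is_attainable(p)]


def reachability_rows():
    """One row per attribute: the attribute itself plus its prerequisites."""
    return [
        tuple(int(a in required_attributes(target)) for a in ATTRIBUTES)
        for target in ATTRIBUTES
    ]


print(len(attainable_patterns()))  # 40 of the 64 possible patterns
for row in reachability_rows():
    print(row)
```

The last row printed is `(0, 0, 0, 1, 1, 1)`: an item that targets A6 also requires A4 and A5.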

Preliminary Test: Item Quality Monitoring

Participants

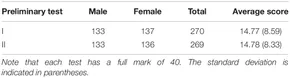

In the preliminary test, 296 students (145 males and 151 females) were recruited by convenience sampling from six classes in grade seven of junior high school A.

Procedure

To avoid the fatigue effect, 80 items were divided into two tests (preliminary test I and preliminary test II, with 40 items in each test). All participants took part in the two tests. Each test lasted for 90 min, and the two tests were completed within 48 h.

Analysis

Item difficulty and discrimination were computed based on the classical test theory. The differential item functioning (DIF) was checked using the difR package (version 5.0) (Magis et al., 2018) in R software.

Results

A total of 296 students took the preliminary test. After data cleaning, 270 and 269 valid tests were collected in preliminary test I and preliminary test II, respectively. The effective rates of preliminary test I and preliminary test II were 91.22 and 91.19%, respectively. Table 4 presents the basic sample information and descriptive statistics of the raw scores. The distribution of the raw scores for the two tests was the same.

Key Information

Participants

| Male | Female | Total |
|---|---|---|
| 145 | 151 | 296 |

- Two tests with 40 items each.

- Each test lasted 90 min.

- The two tests were completed within 48 h.

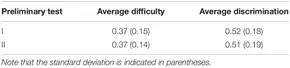

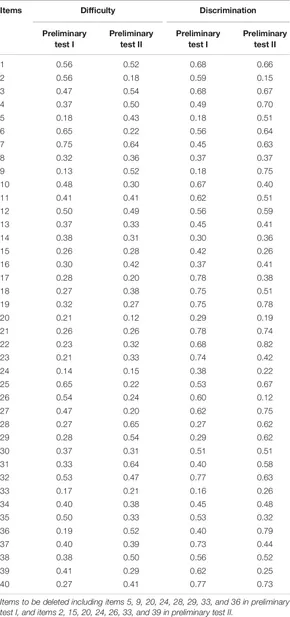

Table 5 presents the average difficulty and the average discrimination of the preliminary test (the difficulty and discrimination of each item are presented in Table 6). In classical test theory, item difficulty (i.e., the pass rate) is equal to the ratio of the number of people who have a correct response to the total number of people, and item discrimination is equal to the difference between the pass rate of the upper 27% of the group and that of the lower 27% of the group. In general, a high-quality test should have the following characteristics: (a) the average difficulty of the test is 0.5, (b) the difficulty of each item is between 0.2 and 0.8, and (c) the discrimination of each item is greater than 0.3. Based on the above three criteria, we deleted eight items in preliminary test I and seven items in preliminary test II.

Key Information

- What's difficulty?

The ratio of the number of people who have a correct response to the total number of people.

- What's discrimination?

The difference between the pass rate of the upper 27% of the group and that of the lower 27% of the group.

The characteristics of a high-quality test:

- the average difficulty of the test is 0.5,

- the difficulty of each item is between 0.2 and 0.8, and

- the discrimination of each item is greater than 0.3.
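
The two definitions and the item-level criteria above can be sketched in a few lines. This is an illustrative sketch, not the authors' script: the function names, the toy response matrix, and the choice to treat the 0.2 to 0.8 bounds as inclusive are assumptions.

```python
def item_statistics(responses, tail=0.27):
    """Compute (difficulty, discrimination) per item.

    responses: one list of 0/1 item scores per student.
    Difficulty is the pass rate; discrimination is the pass rate of the
    top `tail` share of students minus that of the bottom `tail` share.
    """
    n_students = len(responses)
    n_items = len(responses[0])
    # Rank students by raw total score, highest first.
    ranked = sorted(responses, key=sum, reverse=True)
    n_tail = max(1, round(n_students * tail))
    upper, lower = ranked[:n_tail], ranked[-n_tail:]
    stats = []
    for j in range(n_items):
        difficulty = sum(r[j] for r in responses) / n_students
        discrimination = (sum(r[j] for r in upper) - sum(r[j] for r in lower)) / n_tail
        stats.append((difficulty, discrimination))
    return stats


def flag_items(stats, p_low=0.2, p_high=0.8, d_min=0.3):
    """Return indices of items outside the difficulty range or with low discrimination."""
    return [
        j for j, (p, d) in enumerate(stats)
        if not (p_low <= p <= p_high) or d <= d_min
    ]


# Toy data (hypothetical): 10 students, 3 items; item 2 is answered correctly by everyone.
toy = [
    [1, 1, 1], [1, 1, 1], [1, 1, 1], [1, 1, 0], [1, 1, 0],
    [0, 1, 1], [0, 1, 1], [0, 1, 0], [0, 1, 0], [0, 1, 0],
]
stats = item_statistics(toy)
print(stats)
print(flag_items(stats))  # zero-based indices of items to review
```

In the toy data, the second item is flagged because its pass rate is 1.0 and it does not separate the upper group from the lower group.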

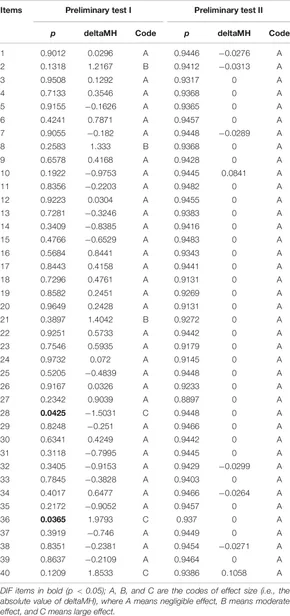

Table 7 presents the results of the DIF testing of the preliminary tests. DIF is an important index for evaluating the quality of an item. If an item exhibits DIF, two observed groups (here, male and female students) with similar overall ability will score significantly differently on it. In the preliminary tests, the Mantel-Haenszel method (Holland and Thayer, 1986) was used to conduct DIF testing. Males were treated as the reference group, and females were treated as the focal group. The results indicated that items 28 and 36 in preliminary test I had DIF, and no item in preliminary test II had DIF. According to item difficulty and discrimination in the above analysis, these two items were classified as items to be deleted.

Key Information

- What's DIF?

An important index for evaluating the quality of an item. If an item exhibits DIF, two observed groups (here, male and female students) with similar overall ability will score significantly differently on it.

The items with DIF will be deleted.
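
To make the Mantel-Haenszel idea concrete, here is a minimal pure-Python sketch. It is not the difR implementation used in the paper; the function name and the toy data are hypothetical. Students are matched on a total score, a 2x2 table (group by correct/incorrect) is built per score level, and the common odds ratio and the continuity-corrected chi-square statistic are pooled over levels.

```python
from collections import defaultdict


def mantel_haenszel(item, total, group, reference="M"):
    """Return (common odds ratio, MH chi-square) for one studied item.

    item:  0/1 scores on the studied item
    total: matching variable, e.g. the raw total score
    group: group label per student; `reference` marks the reference group
    """
    # Per stratum: [A, B, C, D] = reference correct/incorrect, focal correct/incorrect.
    strata = defaultdict(lambda: [0, 0, 0, 0])
    for x, t, g in zip(item, total, group):
        idx = (0 if g == reference else 2) + (0 if x == 1 else 1)
        strata[t][idx] += 1

    num = den = 0.0
    sum_a = sum_e = sum_v = 0.0
    for a, b, c, d in strata.values():
        n = a + b + c + d
        n_ref, n_foc = a + b, c + d
        m1, m0 = a + c, b + d
        if n < 2 or n_ref == 0 or n_foc == 0:
            continue  # a stratum with a single group carries no information
        num += a * d / n
        den += b * c / n
        sum_a += a
        sum_e += n_ref * m1 / n                               # expected A under no DIF
        sum_v += n_ref * n_foc * m1 * m0 / (n * n * (n - 1))  # variance of A

    odds_ratio = num / den if den > 0 else float("inf")
    chi2 = (abs(sum_a - sum_e) - 0.5) ** 2 / sum_v if sum_v > 0 else 0.0
    return odds_ratio, chi2


# Toy example (hypothetical data): both groups perform identically within each score level.
item = [1, 0, 1, 0, 1, 0, 1, 0]
total = [1, 1, 1, 1, 2, 2, 2, 2]
group = ["M", "M", "F", "F", "M", "M", "F", "F"]
print(mantel_haenszel(item, total, group))
```

An odds ratio near 1 suggests no DIF. The chi-square statistic has one degree of freedom, so values above roughly 3.84 would be significant at the 0.05 level.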

By analyzing item difficulty, item discrimination, and DIF, 65 items finally remained (including 32 items in preliminary test I and 33 items in preliminary test II) to form the final item bank. Among them, there are 3–5 candidate items corresponding to each of the 18 attribute patterns in the initial Q-matrix. Furthermore, based on the initial Q-matrix, three learning diagnostic tests with the same Q-matrix were randomly extracted from the final item bank to form the instrument of the formal tests: formal test A, formal test B, and formal test C.

Key Information

The final item bank contains 65 items: 32 from preliminary test I and 33 from preliminary test II.

Three tests with the same Q-matrix were randomly extracted from the final item bank to form the instrument of the formal tests:

- Test A

- Test B

- Test C
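
The paper says only that the three tests were randomly extracted from the final item bank under the same Q-matrix. One possible way to do this is sketched below, under the assumption that each form takes one item per Q-matrix row and that no item is shared between forms. The function name, the item identifiers, and the toy bank are hypothetical.

```python
import random


def assemble_parallel_forms(item_bank, n_forms=3, seed=None):
    """Draw `n_forms` tests that share the same Q-matrix.

    item_bank: dict mapping a Q-matrix row (tuple of 0/1) to a list of
               candidate item ids that measure exactly that row.
    Returns a list of forms; each form is a list of (row, item id) pairs.
    """
    rng = random.Random(seed)
    forms = [[] for _ in range(n_forms)]
    for row, candidates in item_bank.items():
        if len(candidates) < n_forms:
            raise ValueError(f"row {row} has fewer than {n_forms} candidate items")
        # Sample without replacement so that the forms do not share items (an assumption).
        chosen = rng.sample(candidates, n_forms)
        for form, item_id in zip(forms, chosen):
            form.append((row, item_id))
    return forms


# Toy bank (hypothetical): two Q-matrix rows with 3 to 5 candidates each.
bank = {
    (1, 0, 0, 0, 0, 0): ["i01", "i02", "i03", "i04"],
    (0, 0, 0, 1, 1, 1): ["i05", "i06", "i07"],
}
test_a, test_b, test_c = assemble_parallel_forms(bank, seed=1)
print(test_a)
```

Because every form draws one item from each row, the three forms have identical Q-matrices by construction; whether they are statistically parallel still has to be checked empirically.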

Formal Test: Q-Matrix Validation, Reliability and Validity, and Parallel Test Checking

It was possible that the initial Q-matrix was not adequately representative despite the level of care exercised. Thus, empirical validation of the initial Q-matrix was still needed to improve the accuracy of subsequent analysis (de la Torre, 2008). Although item quality was controlled in the preliminary test, it was necessary to ensure that these three tests, as instruments for longitudinal learning diagnosis, met the requirements of parallel tests. Only in this way could the performance of students at different time points be compared.

Key Information

Aim of the formal test: verify that the three tests meet the requirements of parallel tests.

Purpose: make the performance of students at different time points comparable.

Participants

In the formal tests, 301 students (146 males and 155 females) were recruited by convenience sampling from six classes in grade seven of junior high school B.

Procedure

All participants were tested simultaneously. The three tests (i.e., formal tests A, B, and C) were administered in turn. Each test lasted 40 min, and the three tests were completed within 48 h.

Analysis

Apart from some descriptive statistics, the data in the formal test were mainly analyzed based on the LDMs using the CDM package (version 7.4-19) (Robitzsch et al., 2019) in R software. The analyses included the model–data fitting, the empirical validation of the initial Q-matrix, the model parameter estimation, and the testing of reliability and validity. In the parallel test checking, the consistency of the three tests in terms of the raw scores, the estimated item parameters, and the diagnostic classifications was calculated.

Key Information

Participants

| Male | Female | Total |
|---|---|---|
| 146 | 155 | 301 |

Software

CDM package in R software.

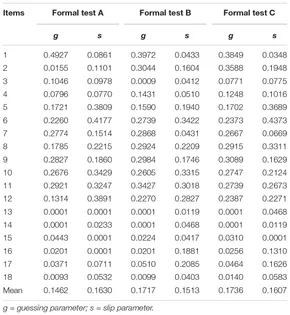

The deterministic-input, noisy “and” (DINA) model (Junker and Sijtsma, 2001), the deterministic-input, noisy “or” (DINO) model (Templin and Henson, 2006), and the general DINA (GDINA) model (de la Torre, 2011) were used to fit the data. In the model–data fitting, as suggested by Chen et al. (2013), the AIC and BIC were used for the relative fit evaluation, and the RMSEA, SRMSR, MADcor, and MADQ3 were used for the absolute fit evaluation. In the model parameter estimation, only the estimates of the best-fitting model were presented. In the empirical validation of the initial Q-matrix, the procedure suggested by de la Torre (2008) was used. In the model-based DIF checking, the Wald test (Hou et al., 2014) was used. In the testing of reliability and validity, the classification accuracy (Pa) and consistency (Pc) indices (Wang et al., 2015) were computed.

Key Information

Models used for fitting:

- The deterministic-input, noisy “and” (DINA) model (Junker and Sijtsma, 2001),

- the deterministic-input, noisy “or” (DINO) model (Templin and Henson, 2006), and

- the general DINA (GDINA) model (de la Torre, 2011)

Evaluation for fitting:

- Relative fit evaluation: AIC and BIC

- Absolute fit evaluation: RMSEA, SRMSR, MADcor, and MADQ3

The model-based DIF checking: the Wald test

Reliability and validity testing: classification accuracy (Pa) and consistency (Pc) indices
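
The difference between the DINA and DINO models lies in how an attribute pattern is turned into a probability of a correct response. The sketch below shows the standard item response functions with slip and guessing parameters; it is not the CDM package code, and the function names and parameter values are illustrative assumptions.

```python
def dina_probability(alpha, q, slip, guess):
    """DINA: the student must master ALL attributes required by the item.

    alpha: the student's attribute pattern (0/1 per attribute)
    q:     the item's Q-matrix row (1 = attribute required)
    """
    masters_all = all(a == 1 for a, required in zip(alpha, q) if required)
    return 1 - slip if masters_all else guess


def dino_probability(alpha, q, slip, guess):
    """DINO: mastering AT LEAST ONE required attribute is enough."""
    masters_any = any(a == 1 for a, required in zip(alpha, q) if required)
    return 1 - slip if masters_any else guess


# A mixed-operation item requires A4, A5, and A6.
q_row = (0, 0, 0, 1, 1, 1)
student = (1, 1, 1, 1, 1, 0)  # masters everything except A6
print(dina_probability(student, q_row, slip=0.1, guess=0.2))  # guessing level
print(dino_probability(student, q_row, slip=0.1, guess=0.2))  # 1 - slip
```

Under DINA, the student who lacks A6 answers this item correctly only by guessing, whereas under DINO, mastering A4 or A5 would already be sufficient. The GDINA model generalizes both by allowing a separate success probability for each combination of the required attributes.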

- Results

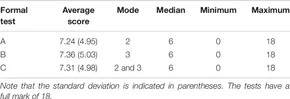

Descriptive statistics of raw scores

A total of 301 students took the formal test. After data cleaning, the same 277 valid tests (including those from 135 males and 142 females) were collected from each of the three tests; the effective rate of the formal tests was 93.57%. Table 8 presents the descriptive statistics of raw scores in the formal tests. The average, standard deviation, mode, median, minimum, and maximum of raw scores of the three tests were the same.

Key Information

| | Male | Female | Total |
|---|---|---|---|
| Participants | 146 | 155 | 301 |
| Valid | 135 | 142 | 277 |

- Model–data fitting

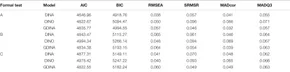

The parameters in an LDM can be interpreted only when the selected model fits the data. The fit indices presented in Table 9 provide information about how well the three LDMs, namely DINA, DINO, and GDINA, fit the data and are used to determine the best-fitting model. For absolute fit indices, values near zero indicate good fit (Oliveri and von Davier, 2011; Ravand, 2016). The results indicated that all three models fitted the data well. For relative fit indices, smaller values indicate a better fit. The DINA model was preferred based on the BIC, and the GDINA model was preferred based on the AIC. According to the parsimony principle (Beck, 1943), a simpler model is preferred if its performance is not significantly worse than that of a more complex model. Both AIC and BIC introduce a penalty for model complexity. However, as the sample size is included in the penalty in BIC, the penalty in BIC is larger than that in AIC. The DINA model was chosen as the best-fitting model for two reasons: the small sample size of this study might not meet the needs of accurate parameter estimation for the GDINA model, and the item parameters in the DINA model have more straightforward interpretations. Therefore, the DINA model was used for the follow-up model-based analyses.

Key Information

DINA, DINO, and GDINA all fitted the data well.

DINA was chosen as the best-fitting model.
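
The reason BIC favors the simpler DINA model while AIC favors GDINA can be seen from the standard definitions of the two criteria. The sketch below uses the textbook formulas; the log-likelihoods and parameter counts are made-up illustrative numbers, not values from Table 9, and only the sample size of 277 valid students comes from the text.

```python
import math


def aic(log_likelihood, n_params):
    """AIC = -2 log L + 2 p: each parameter costs 2."""
    return -2 * log_likelihood + 2 * n_params


def bic(log_likelihood, n_params, n_obs):
    """BIC = -2 log L + p ln(N): each parameter costs ln(N)."""
    return -2 * log_likelihood + n_params * math.log(n_obs)


n_obs = 277  # valid students in the formal tests
print(round(math.log(n_obs), 2))  # cost per parameter under BIC, about 5.62

# Hypothetical comparison: the complex model fits slightly better but has more parameters.
simple = {"log_likelihood": -2500.0, "n_params": 60}
complex_ = {"log_likelihood": -2460.0, "n_params": 90}
for name, m in (("simple", simple), ("complex", complex_)):
    print(name, aic(m["log_likelihood"], m["n_params"]),
          round(bic(m["log_likelihood"], m["n_params"], n_obs), 1))
```

With these illustrative numbers, AIC prefers the complex model and BIC prefers the simple one, which mirrors the pattern reported for GDINA and DINA.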

- Q-matrix validation

A misspecified Q-matrix can seriously affect the parameter estimation and the results of diagnostic accuracy (de la Torre, 2008; Ma and de la Torre, 2019). Notice that the Q-matrix validation can also be skipped when the model fits the data well. Table 10 presents the revision suggestion based on the empirical validation of the initial Q-matrix. In all three tests, the revision suggestion was only for item 9. However, after the subjective and empirical judgment of the experts (Ravand, 2016), this revision suggestion was not recommended to be adopted. Let us take item 9 (“Which number minus 7 is equal to −10?”) in formal test A as an example. Clearly, this item does not address the suggested changes in A3, A5, and A6. As the expert-defined Q-matrix was consistent with the data-driven Q-matrix, the initial Q-matrix was used as the confirmed Q-matrix in the follow-up analyses.

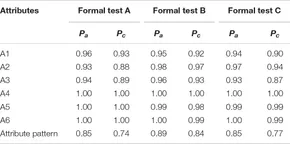

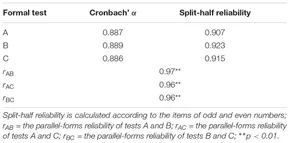

- Reliability and validity

Classification accuracy (Pa) and consistency (Pc) are two important indicators for evaluating the reliability and validity of classification results. According to Ravand and Robitzsch (2018), values of at least 0.8 for the Pa index and 0.7 for the Pc index can be considered acceptable classification rates. As shown in Table 11, both pattern- and attribute-level classification accuracy and consistency were within the acceptable range. Additionally, Cronbach’s α, split-half reliability, and parallel form reliability were also computed based on the raw scores (see Table 12). The attribute framework of this study was reassessed by several experts, and the Q-matrix was confirmed, indicating that the content validity and the structural validity of this study were good. To further verify the external validity, the correlation between the raw score of each formal test and the raw score of a monthly exam (denoted as S; the content of this test is the chapter on “rational numbers”) was computed (rAS = 0.95, p < 0.01; rBS = 0.95, p < 0.01; rCS = 0.94, p < 0.01). The results indicated that the reliability and validity of all three tests were good.

Key Information

(rAS = 0.95, p < 0.01; rBS = 0.95, p < 0.01; rCS = 0.94, p < 0.01)

- Parallel test checking

To determine whether there were significant differences in the performance of the same group of students in the three tests, the raw scores, estimated item parameters (Table 13), and diagnostic classifications (Table 14) were analyzed by repeated measures ANOVA. The results indicated no significant difference in the raw scores [F(2,552) = 1.054, p = 0.349, BF10 = 0.0382], estimated guessing parameters [F(2,34) = 1.686, p = 0.200, BF10 = 0.463], estimated slip parameters [F(2,34) = 0.247, p = 0.783, BF10 = 0.164], and diagnostic classifications [F(2,78) ≈ 0.000, p ≈ 1.000, BF10 = 0.078] in the same group of students in the three tests.

As the three tests examined the same content knowledge, contained the same Q-matrix, had high parallel-forms reliability, and had no significant differences in the raw scores, estimated item parameters, and diagnostic classifications, they could be considered to meet the requirements of parallel tests.
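
The degrees of freedom reported above follow from a one-way repeated measures ANOVA with three conditions (the three tests): 277 students give F(2, 552), 18 items give F(2, 34), and 40 attribute patterns give F(2, 78). A minimal sketch of the F statistic is shown below; it does not compute p-values or Bayes factors, and the function name and toy data are hypothetical.

```python
def repeated_measures_anova(scores):
    """One-way repeated measures ANOVA.

    scores: one row per subject, each row holding k repeated measurements.
    Returns (F, df_condition, df_error). Assumes the error sum of squares
    is not zero.
    """
    n = len(scores)
    k = len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    condition_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subject_means = [sum(row) / k for row in scores]

    ss_condition = n * sum((m - grand) ** 2 for m in condition_means)
    ss_subject = k * sum((m - grand) ** 2 for m in subject_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    # Between-subject variation is removed from the error term.
    ss_error = ss_total - ss_condition - ss_subject

    df_condition = k - 1
    df_error = (k - 1) * (n - 1)
    f_value = (ss_condition / df_condition) / (ss_error / df_error)
    return f_value, df_condition, df_error


# Toy data (hypothetical): 3 subjects measured on 3 tests.
toy_scores = [[1, 2, 3], [2, 2, 2], [3, 1, 2]]
print(repeated_measures_anova(toy_scores))
```

For a data set with 277 rows and 3 columns, the function returns degrees of freedom of 2 and 552, matching the raw-score test reported in the text.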

Conclusion and Discussion

This study developed an instrument for longitudinal learning diagnosis of rational number operations. To provide a reference for practitioners who want to develop such an instrument, the development process was presented step by step. The development process contains three main phases: the Q-matrix construction and item development, the preliminary test for item quality monitoring, and the formal test for test quality control. The results of this study indicate that (a) both the overall quality of the tests and the quality of each item are good enough and that (b) the three tests meet the requirements of parallel tests, which can be used as an instrument for longitudinal learning diagnosis to track students’ learning.

However, this study still has some limitations. First, to increase operability, only binary attributes were adopted. As a binary attribute can only divide students into two categories (i.e., mastery and non-mastery), it may not meet the need for a multilevel division of practical teaching objectives (Bloom et al., 1956). Polytomous attributes and the corresponding LDMs (Karelitz, 2008; Zhan et al., 2020) can be adopted in future studies. Second, the adopted instrument for longitudinal learning diagnosis was based on parallel tests. However, in practice, perfectly parallel tests do not exist. In further studies, the anchor-item design (e.g., Zhan et al., 2019) can be adopted to develop an instrument for longitudinal learning diagnosis. Third, an appropriate Q-matrix is one of the key factors in learning diagnosis (de la Torre, 2008). However, the Q-matrix used in the instrument may not strictly meet the requirements of identification (Gu and Xu, 2019), which may affect the diagnostic classification accuracy.